Incrementality testing, also called uplift modeling and incremental lift testing, answers the age-old question, "Does this advertising initiative really boost conversions?" It's a tool we use to evaluate the success of a marketing initiative. You can use this testing methodology to compare the business outcomes from a test group with the results from a control group (also called a holdout). Incremental lift gives you a way of calculating the additional conversions that result directly from your advertising campaign.

Don't worry! This does require math, but the formula is easy. As long as you have a working knowledge of sixth grade algebra, you can do this calculation in a flash. Bad at subtraction and division? That's what unpaid interns are for!

Measuring incremental lift allows you to peer beneath the veil of pixel-based attribution modeling, revealing where sales data may be padded by advertising platforms. Instead of looking at your last touch attributions in Facebook Advertising and the Google Search Console, incrementality testing requires that you use your own internal sales metrics. This leaves a lot less room for funny business, double-counting, and sleight-of-hand.

Plus, this form of testing works well for some of the harder-to-measure marketing goals such as brand awareness. It can even be used to evaluate old-school marketing channels. So get ready with some flyers, classified ads, and direct mailers. It's 2021, the year we measure everything—not just clicks.

Picture this scenario: you work at a company that’s running a complex, multi-platform promotion. Ultimately, you probably care less about the minutiae of tracking attribution than you do about the quality of the conversion. The most important question you need to answer is this:

Did the promotion generate a sale that wouldn’t have happened otherwise?

Incrementality testing gives you a way to answer that question.

Beyond that major "win," there are a few other benefits of incrementality testing. One of the interesting things about the incrementality mindset, in my opinion, is that it can embolden marketers to take more chances with creative. At the same time, this type of testing encourages a more scientific approach.

By building a very structured test—thinking through any confounding variables—you avoid some of the pitfalls common to ongoing digital marketing campaigns. After all, it's hard to evaluate your e-commerce ads when your coworkers are tinkering with the checkout flow, product branding, and website design. And, normally, you’re not going to pull the plug on search ads . . . even when all hell is breaking loose with your website. As you’ve probably noticed, this can lead to some very wonky A/B test results.

At the end of the day, measuring incremental impact requires a significant investment of money and time. Your team needs to commit to the idea of setting up controlled experiments. Seriously, gather your teammates together and extract pinky promises: I solemnly swear to do everything in my power to support this controlled experiment.

We love incrementality testing because it brings potential pitfalls to the surface. Oh, we're planning a full website relaunch? Maybe we should hold off on tests! Those are the conversations that you want to have with your team—and that you sometimes miss when you're running small potato tests with regular attribution modeling.

Since lift studies require careful set-up and data analysis, you know that your organization is likely to learn something from each test—even if you don't see an increase in incremental conversions.

In order to build a successful incrementality test, it's essential to test a large enough sample size. But how do you know just how big your sample size needs to be?

Here's a quick hack borrowed from web optimization testing. Optimizely offers great instructions for setting up an experiment based on the minimum detectable effect (MDE) on conversion rate that you're looking to confirm. To set this up, you need to know your baseline conversion rate, the minimum detectable effect that you're looking for (a bigger MDE requires less data), and the statistical significance for your experiment (95% is standard). Input your specs, and the Optimizely tool will tell you what sample size you need.
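If you'd like a rough sanity check before opening a calculator, here is a minimal Python sketch of the textbook sample size formula for comparing two conversion rates. It is not Optimizely's method, so expect its numbers to differ from their tool. The function name, the 80% statistical power default, and the example inputs (a 3% baseline and a 20% relative MDE) are all assumptions made for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_group(baseline_rate, relative_mde, significance=0.95, power=0.80):
    """Approximate audience needed in EACH group (two-sided, two-proportion z-test).

    baseline_rate: control conversion rate, e.g. 0.03 for 3%
    relative_mde:  smallest relative lift worth detecting, e.g. 0.20 for +20%
    power:         assumed 80% here; the article does not specify a value
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)  # rate the test group would need to hit
    alpha = 1 - significance
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_power * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical inputs: 3% baseline conversion rate, 20% relative MDE.
print(sample_size_per_group(0.03, 0.20))
# A smaller MDE needs far more data, as noted above.
print(sample_size_per_group(0.03, 0.05))
```

Try a few MDE values with your own baseline rate. If the required audience is far beyond what your budget can reach, that's a sign to rethink the test before you spend a dime.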

In some cases, this process may prevent you from initiating a test that is doomed to fail. We love dodging a bullet; it makes us feel like Neo in The Matrix. You may do the math, only to discover that reaching a large enough sample size would be too costly. For example, if your business has a long purchase cycle or a very low conversion rate, you might want to consider setting up your incrementality testing around a secondary KPI or business result, something other than purchase conversions.

Experiments will be most successful when you have two very different marketing strategies to test—as long as you remember to test one variable at a time. Distinct treatments usually allow your team to achieve statistically significant results faster with less ad spend and a smaller sample size.

As an example, incrementality testing Facebook marketing vs. no Facebook marketing would probably yield very clear results quickly. The difference should be stark. On the other hand, when you compare running a campaign that includes one single ad variant vs. running the same campaign without it, the experiment will likely take more money, more time, and a larger sample size—because you'd be looking for a very small MDE.

So, the bigger the difference, the better the test. We suggest you use incrementality measurement to compare distinct messaging, ways of distributing content, or other striking optimizations. By testing your biggest, boldest variables first, you'll maximize the impact of your testing budget.

Hopefully, this advice inspires you to test something bonkers . . . After all, it just might work.

In some cases, incrementality tests may lead to exciting downstream discoveries that you don't expect. This can be a great learning tool, allowing you to understand more about how your customers behave.

Keep an eye out for differences between your control group and test group. These can include:

Often, these kinds of unintended results enable you to spot important optimizations and find amazing opportunities for business growth.

As an example, we can share a story about one of our clients, a major direct-to-consumer brand that's a household name. Together, we uncovered some crazy learnings about the impact of ad placement on conversion channel.

They had been spending loads of money on an audio streaming platform, using banner ads. Unfortunately, those ads did not produce a very healthy return on investment. Let’s just say that the results were milquetoast. They weren’t bad enough to pull, but they left a lot to be desired.

One of our analysts offered a great idea, suggesting that the client try testing remnant audio ads. That would allow them to reach the same customer base with a less expensive placement. There was one problem with this, though. Almost no users were clicking on the ads. As a result, if you looked at a click-based attribution model, the switch to remnant ads showed almost no value.

We added a promotional code to the audio messaging and ran a paired city test to measure the uplift in organic revenue.

Shockingly, that allowed us to spot a large number of users signing up organically wherever an audio ad was running. We also saw a corresponding increase in promo code redemptions. Altogether, when we reviewed the performance of these audio ads, we observed 3-4 times more ROI from remnant ads compared to the control markets.

The discovery absolutely floored our client. These findings allowed us to arbitrage between the remnant and banner markets. Meanwhile, most direct response advertisers remained blind to the opportunity. Clicks weren’t telling the whole story because remnant ads shifted the conversion channel.

Crunching the numbers to determine incremental lift couldn't be easier. Seriously, try it yourself before you loop in Bill from accounting. Simply take the test group conversion rate and subtract the control group conversion rate. Divide that number by the control group conversion rate.

We wish we could take credit for this useful formula, but we didn't make it up. It's the standard equation for relative change.
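For anyone who'd rather let a computer do the subtraction and division, here is a small Python sketch. It assumes the standard relative change form, where the difference between the two rates is divided by the control (baseline) rate. The function names and the conversion counts are hypothetical, made up purely for illustration.

```python
def conversion_rate(conversions, audience_size):
    """Share of the audience that converted."""
    return conversions / audience_size


def incremental_lift(test_rate, control_rate):
    """Relative change of the test group's rate versus the control group's rate."""
    if control_rate == 0:
        raise ValueError("Control rate is zero, so relative lift is undefined.")
    return (test_rate - control_rate) / control_rate


# Hypothetical numbers: 10,000 people in each group.
test_rate = conversion_rate(360, 10_000)     # 3.6% converted with ads
control_rate = conversion_rate(300, 10_000)  # 3.0% converted without ads

lift = incremental_lift(test_rate, control_rate)
print(f"Incremental lift: {lift:.1%}")  # prints "Incremental lift: 20.0%"
```

In this made-up example, the ads drove 20% more conversions than the holdout would have produced on its own.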

Here's the exciting thing. You can use the same calculation to determine the incremental lift of anything: clicks, comments, add to carts. The list goes on and on. Make a date with your calculator. Before long, you're going to be up close and personal with the incremental impact of all your marketing decisions. See how your choices affect every KPI that matters (and probably a few KPIs that don't).

Notice that this formula does not take your budget or reach into account. Usually, we give the control and test groups similar budgets, but that’s not entirely necessary. The most important constraint is that all groups should have a similar ad frequency. Even if you’re comparing different marketing platforms, let’s say incrementality testing Google vs. TikTok ads, try to ensure that each audience has similar awareness levels by the end of the experiment and that your ad delivery was consistent for the test and control groups.

We've outlined a few of the most common ways that marketers set up incrementality tests below. Of course, you may be able to come up with even better ways to set up your experiments. If that's the case, cool! We couldn't be prouder.

As with scientific experiments in a lab, coming up with the smartest experimental design is half the fun.

You’ll often find that the digital ad platforms that don’t offer incrementality testing options—cough, Google UAC, cough—may have something to hide. If there’s no internal way of setting up an incrementality test, don’t let that stop you from designing a great experiment.

For instance, we once ran a blank video ad as a control against real video ads as a way to prove that the video conversions were cannibalizing organic installs. (They totally were.)

The moral of the story: when an advertising platform gives you lemons, don’t be afraid to bring your own tequila to the party.

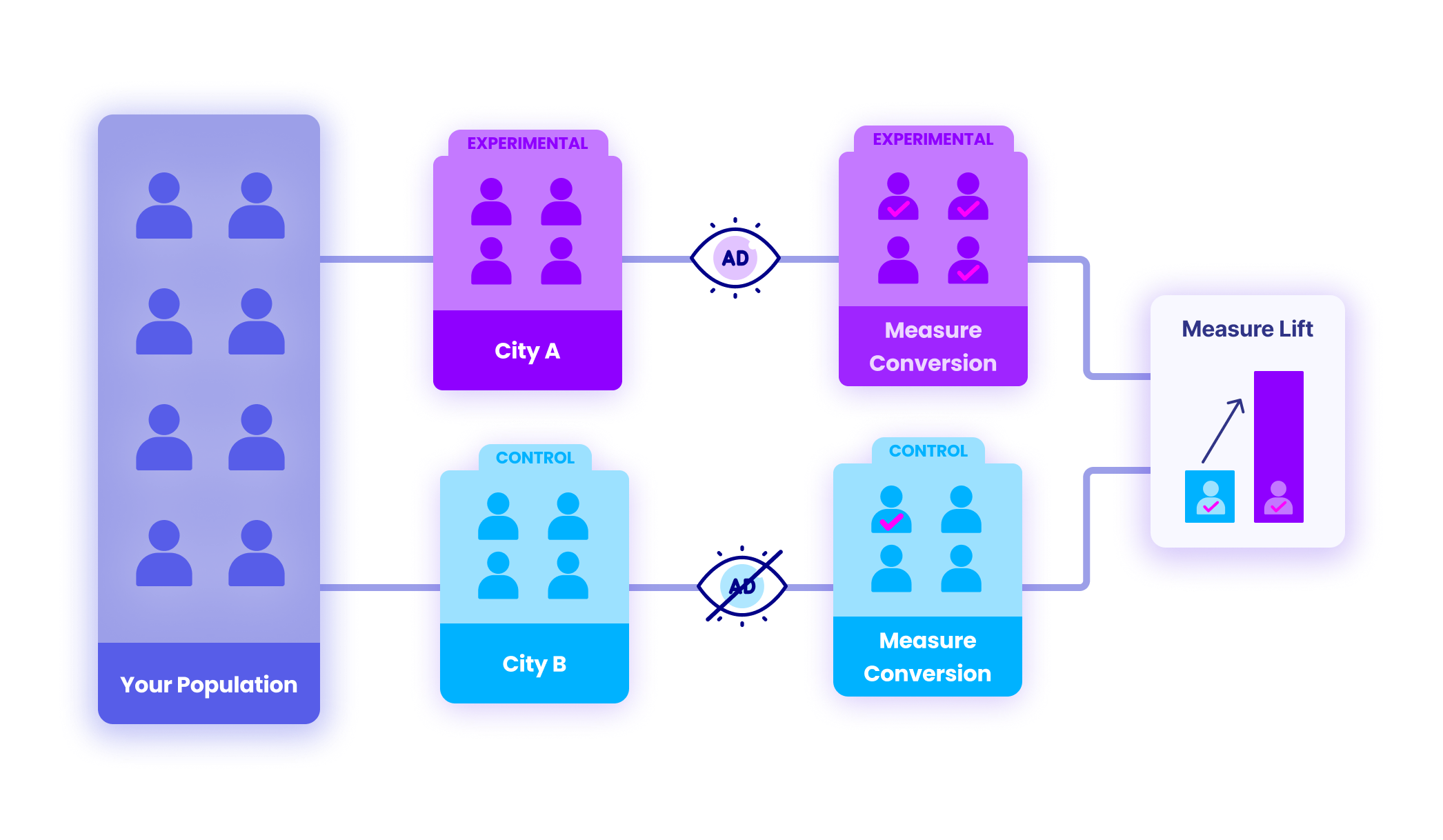

To arrange a split-test based on geography, you simply need to find two areas with similar populations. It helps if they have similar characteristics, such as population size, behavior, market density, gender distribution, ethnic makeup, etc. Of course, no two places are exactly identical, but try to use good judgement.

No—California and Vermont are not a perfect match. Brazil and Kazakhstan? Think again.

Make one city/region/state/country your test group. The other city/region/state/country is the holdout. Next, expose the test group to your awesome new marketing strategy. Measure the lift.

Facebook offers incrementality testing within their platform. Rather than setting up the control and test group yourself, you sit back while Facebook does the heavy lifting. They automatically divide your audience into two (nearly) identical groups, then they only show your ads to the test group. They make it easy to set up, and the results show you the incremental conversions and revenue from your experiment. We rate this 10/10 for ease of use.

Unfortunately, because these tests only work for Facebook and Instagram, you can't experiment with a cross-platform campaign—for instance, you can't distribute content across all the online advertising that the test group will see. Therefore, a test customer is likely to observe different branding or messaging as they move across the internet.

One of the simplest ways to segment your audience is with time cohorts. For example, imagine you wanted to know the incremental lift from a last touch campaign (Campaign A) designed to drive immediate conversions on branded search. Well, that's easy. Run Campaign A for an hour with an appropriate budget to reach your desired sample size. Then turn it off.

That's right. It may sound radical, but we advise you to turn off Campaign A for one hour. Keep your ad frequency consistent. The people searching for your brand will likely have a similar demographic breakdown compared to the folks who searched for your product one hour earlier. So, you're effectively comparing the first group, which was exposed to your branded search marketing campaign (Campaign A), to the second group, which acts as a control.

This functions best with branded search, but you could also use a similar technique for any other last touch marketing channel, such as YouTube, Facebook, etc. Be aware that your results will be channel specific, and you can't really examine any long-tail or second-order effects with this methodology. Also, your brand needs a significant search volume to make this a feasible option.

Sorry, small businesses, you’ll probably have to sit this one out.

If your company has a large email list, you can use your leads for both your test and control groups. Divide the list into two segments (or more, depending on how large your list is).

Remember to divvy up your list by taking randomized samples. Why? Well, have you ever noticed that everyone named Brett acts like everyone else named Brett? And, Lord knows, enough has already been written about Karens . . . That's why alphabetical order isn't going to cut it.

Luckily, computers can make random selections lickety-split.

Most email marketing platforms allow you to export a randomized email list, but you can also create them manually in Excel. Just add a column with the RAND() function and then sort your emails accordingly.
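If spreadsheets aren't your thing, the same shuffle-and-split idea can be sketched in a few lines of Python. The function name, the `seed` parameter, and the example addresses are assumptions for illustration, not part of any email platform's API.

```python
import random


def split_email_list(emails, n_groups=2, seed=None):
    """Shuffle the list, then deal addresses into n_groups like a deck of cards."""
    rng = random.Random(seed)  # pass a seed if you want a reproducible split
    shuffled = list(emails)    # copy so the original list stays untouched
    rng.shuffle(shuffled)
    return [shuffled[i::n_groups] for i in range(n_groups)]


# Hypothetical list of ten leads.
emails = [f"lead{i}@example.com" for i in range(10)]
control, test = split_email_list(emails, n_groups=2, seed=42)

print(len(control), len(test))  # prints "5 5"
```

Raise `n_groups` if you want several test groups running against one control. Every address lands in exactly one group, and group sizes differ by at most one.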

Once you have two (or more) neat and tidy email lists, you can use one as the test group and the other as the control. The benefit of this form of incrementality testing is the opportunity to run cross-platform campaigns. For example, you can test a discount variant using email marketing, Facebook, Instagram, YouTube, and search ads all at once. Hell, throw in any other advertising tool you want, too, as long as it allows you to upload custom audience lists. You can also run multiple test groups against the same control.

The downside to this method is that, even for companies with large email lists, your leads are still finite. You may not want to scare away your best prospects by introducing radical shifts in strategy or off-the-wall creative. This method also isn’t ideal for evaluating the success of a prospecting campaign. Your email list contains people who have already moved further down the funnel, so they won't make the best audience for top-of-funnel awareness campaigns.

The attribution model relies on cookies to "follow" a customer across the web. When the customer makes a purchase from your business, the advertising platform console shows you the touch points that may have led to that sale.

Notice how we're careful to say that the ads may have led to the sale. No matter which attribution model you use, which may include "click through," "first touch," "last touch," "multi touch," "view through," and more, the console data only suggests the ad caused the sale.

Any scientist will tell you that correlation is not causation. That distinction is terribly important, whether you study orangutans or digital purchase habits. At the end of the day, there may be a relationship between the ad impression and the purchase, but that relationship is not necessarily causal. There could be a lot of confounding variables. The ad may not be responsible for the sale at all. The ad view and the purchase are linked together in time, but the attribution model doesn't examine the nature of the connection.

Like a small child learning not to touch a hot stove, the incrementality tester studies cause and effect in action. With this kind of mindset, you do more than track the results as they happen. Instead, you play a more active role, designing tests to prove or disprove a hypothesis.

You have the opportunity to approach any variable in your marketing strategy (including brand awareness and word of mouth) as an A/B test. What happens when you press pause on one element? Your sales may plummet, or you may observe no impact on the business at all. By setting up thoughtful experiments, you'll be in a better position to identify true causation, both in your digital ads and across the entirety of your business.